The Revolution of Thought Transmission

Hannes Kaufmann on the present and future of virtual reality, the next leaps in technology, and the limits of human-machine existence.

Hannes Kaufmann is a professor at the Virtual and Augmented Reality Research Unit at TU Wien Informatics. He has been working on virtual reality for 20 years and is an expert on all current developments in the field. We talked to him about the latest VR and AR applications, ethical issues and taboos, and social responsibility.

Professor Kaufmann, what is out there when we enter the virtual world? Can you give us an overview of the developments in virtual/augmented reality?

Hannes Kaufmann: With pleasure. To start with: We grasp the world, including the virtual world, with our senses. The key sense here is sight. It is the most dominant sense and can influence all the others, as I will explain in a moment. At present, we use glasses for this purpose, i.e. we see virtual content overlaid on reality with the help of glasses. Research is already being done on contact lenses as well: In this case, the three-dimensional content is shown directly to the eye. This development is still at an early stage. The electronics of a lens sitting so close to the eye cause problems because this kind of circuit generates heat. The heat goes directly into the eye, which is critical from a medical point of view.

For hearing, or the auditory sense, advanced techniques such as bone conduction devices are used in addition to headphones and speaker systems. These are loudspeakers placed behind the ear that transmit sound through the bones into the auditory canal. This allows users to hear the real environment and simultaneously perceive sounds from the virtual world, which is especially useful in training scenarios.

The senses of smell, taste, and touch, or haptics, play a role as well. Regarding the sense of touch, there are already some products on the market that address our fingertips. Force feedback devices, for example, use a pen: If I hit a virtual sphere or surface with it, I can only move the pen along this surface, and my hand is pushed back at that point. At best, it gives the impression of stroking a pen across a spherical surface. You can only feel these things with your fingers, but the sense of touch is much more complex: The majority of our touch receptors are on the palms of our hands. There are even maps of the distribution of the nerve cells on our body that sense touch. Most of them are located on the hands and face.

There is a lot of research being done on how to address the rest of the hand. We’re doing research on this right now in a project where we’re using a mobile robot on a moving platform. It moves along like a personal assistant whenever I want to touch something in the virtual world. For example, if I want to touch a wall, the robot arm holds a piece of wall out to me. When I virtually walk to this wall, I feel this virtual wall. When I move my hand further, the robot moves the piece of wall with it. This gives me the real feeling of a wall. So what we do is make people believe that it is a real wall.

Are these technologies already in use and is the robot able to move in real time – just as fast as we do?

HK: That’s exactly what we’re working on right now. Nobody has done this before. We are in the second year of this research, but a lot of work still needs to be done. We’re not there yet, and we don’t know exactly how it feels to have a board like this moving along with your hand. It’s still hard to estimate when we will achieve a breakthrough. Our goal is to create a realistic impression of touching virtual content so that users think these virtual things are present in reality.

The haptic illusion – when concrete turns into velvet

What I find interesting is how we can deceive people. The moving robot I just told you about is one example, another is the haptic illusion. Experiments in VR prove that the visual sense always dominates the tactile sense, as I mentioned earlier. For example, I can give you a cylinder, but show you a curved vase. What happens? You feel the cylinder, but recognize a vase – because that’s what you see.

Colleagues in Berlin told us that this is how they made a guest believe a room was lined with velvet. The next time this person visited, they asked about the velvet wall – and were completely surprised to find that it was just bare concrete walls. The visual sense, by the way, can also dominate the auditory sense.

Smelling is tasting

About smell and taste: Taste can be synthesized. We can address the five taste qualities sensed on our tongue: sweet, salty, sour, bitter and umami. A colleague from England has developed a device that works with these five tastes and can synthetically produce different flavors. He let the machine analyze rooibos tea and managed to synthesize a very similar taste, which the test subjects were not able to distinguish from real rooibos tea. Let’s move on to another component: Taste is 80 percent smell. In other words, what you smell is crucial for the perception of taste. However, odor is much more difficult to synthesize because an odor consists of thousands of molecules. We can already buy strawberry or forest smells in flacons, but it gets difficult with more complex smells, as the decisive molecules for a certain smell can’t be put together that easily. For example, the smells of certain rooms are extremely complex. Think of our Audimax: Its smell also changes when people are in it. So that’s about the state of research on the senses.

How long do you think it will be before we can buy devices like the VR contact lens or before differentiated smells can be produced and used?

HK: It always depends on how much hardware you want to have on you. Currently, we still have clunky devices. The goal, after all, is for everyone to be able to wear such glasses comfortably: They should be lightweight and not cause any problems. The miniaturization of the technology still has a long way to go. Development is ongoing, but it is difficult to predict technological leaps. It can happen very quickly, but it can also take longer.

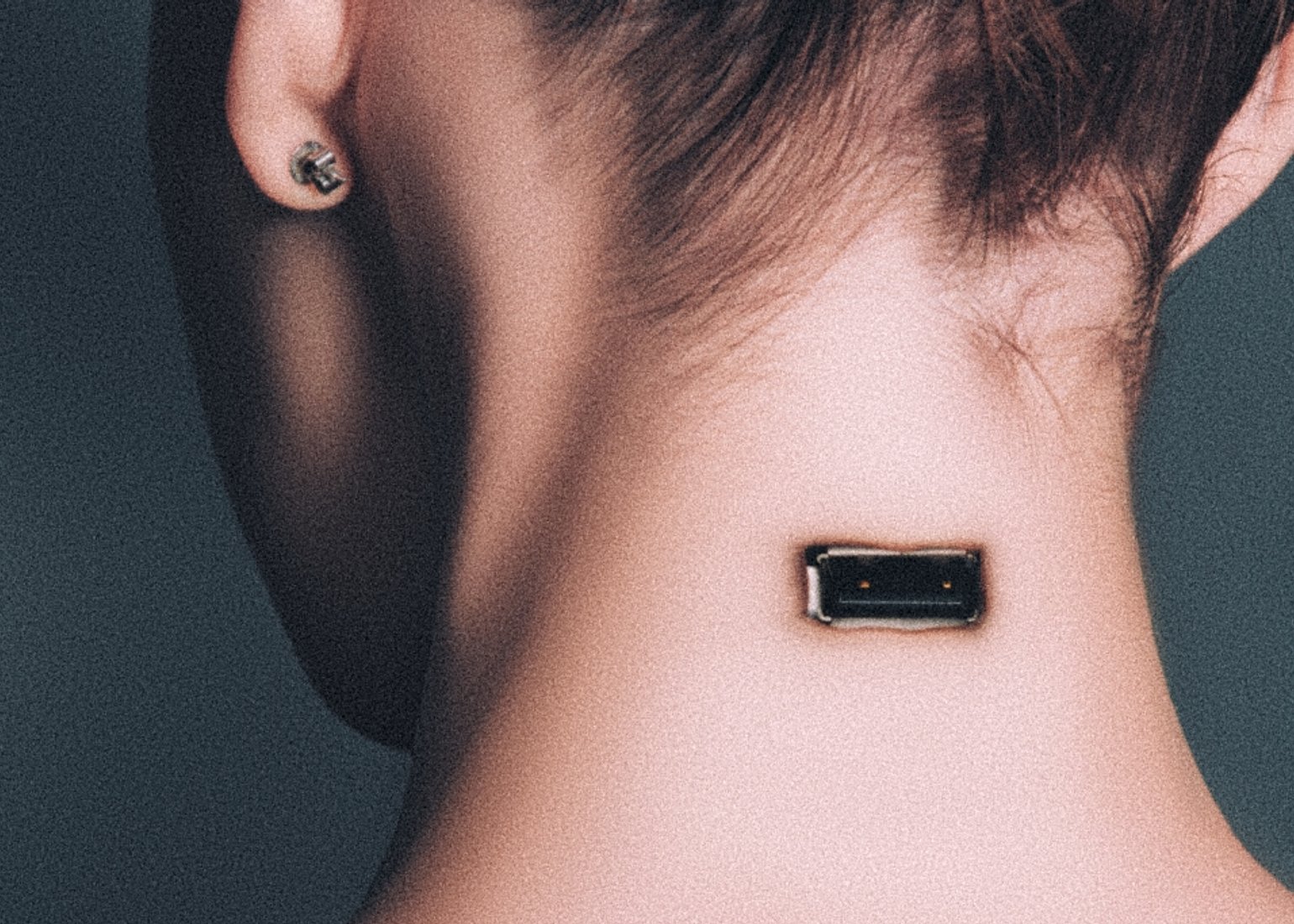

We, the cyborgs

As you have described very vividly, we are bringing technology closer and closer to the body, or it is already in the body. To what extent are we already cyborgs or transhumans?

HK: A lot of people already perceive the mobile phone as an augmented sensor. How much technology one wants to accept in or on the body is also an individual question. This will change over time – once there are implants or applications on the skin that people find useful, I can imagine that they will also become more socially accepted.

To me, the term cyborg is defined by implanted technology. Implants cause medical problems (the body has to accept the foreign object), but they also create dependence on technology, on power, on wireless network connectivity, and so on. In the worst case, they allow our bodies to be controlled from the outside. None of this seems desirable to me.

What is your opinion on all these possibilities of self-optimization or even experimenting with technology on and in the body?

HK: I think that everything that helps medically is good and useful, for example pacemakers, dialysis for children, or diabetes implants that release certain amounts of insulin. Even Parkinson’s patients can be helped by an implant that regulates their tremors through electrical impulses.

It’s also essential that people can decide for themselves. I’m skeptical when it comes to gimmicks – with one exception [laughs]: I wish I could see for two kilometers with my glasses. That would make a lot more sense than wearing glasses that only compensate for my poor eyesight!

This aspect is also what interests artists and the transhumanism movement. One example is the artist Neil Harbisson, who can perceive colors as sounds with his cyborg eye. He has also founded the Cyborg Foundation, which represents the interests of cyborgs (which he considers himself to be). He advocates using technology to gain additional abilities and to expand one’s sensory perception in this way.

Revolution: Thought Transmission

What do you think will be the next big development that right now is still science fiction?

HK: I think the next big revolution will be thought analysis and transmission. With advances in signal analysis – deep learning – decoding brain signals might be the next research field. This will definitely require implants, meaning the body boundary will have to be crossed if we want to pick up brain impulses, because these signals are severely weakened and distorted by our skull bones and hair. If we want to analyze and record specific brain regions, we need implanted sensors. There is research that attaches electrodes to the brain with the goal of reading signals and using them to control devices. Here, medical applications have priority, for example for completely paralyzed patients who are in a coma. Such applications already exist, but communication is very slow – patients can spell a maximum of 50–90 characters per minute.

I don’t think this technology will work without implants in the next 50 years, nor will people have electrodes implanted in their brains in the near future. So the issue here is that a device must not only be attached to the head; it has to actually be inside the head to work. The risks here are great; medically, such an intervention is difficult to justify. And last but not least, there are ethical issues to discuss. First steps in the medical field have been made. But as far as I know, there are still no methods for recognizing and decoding more complex thoughts or wishes, because these are networked differently, i.e. they are stored in different regions of the brain, and there are strong individual differences.

I also doubt whether such a development is desirable. But it’s always like this: Everything that is technically possible will be done. Still, we should always consider the purpose in all this, because once realized, developments are difficult to control or reverse. For me personally, technology has to make sense: It has to help people and offer real added value. Unfortunately, many things are produced today only because they can be sold and not because they make sense.

When I think about gamers, I’m sure that some would have technology implanted so that they can react faster in the game. I don’t think that’s right or ethical. But I’m sure it will happen. However, I advocate remaining cautious and making technological additions only outside our bodies. When it comes to the human body, we have to be very careful.

You are advocating a reflection process before major technological changes are implemented?

HK: Yes! I am aware that developments are difficult to assess, including how social acceptance will develop and what time frames are needed. But we have already seen that the consequences of our technological development have not been considered, including at the political level. Unfortunately, political decisions are always made when it is far too late. We saw this with the Internet boom of the 1990s. As a consequence, major social problems came up, such as cyberbullying in social networks, hate postings, and other things. We should have thought about legal restrictions early on. Only now are major providers required to control the content on their platforms.

I know these developments are hard to predict, but that is precisely why policymakers need to engage more intensively with technology assessment.

This interview was conducted as part of TUW Magazine’s “Science Fiction” issue.

Hannes Kaufmann

Hannes Kaufmann is a professor of Virtual and Augmented Reality at TU Wien Informatics and head of the research unit of the same name. His focus areas include mobile computing, 3D user interface design, mixed reality education, VR, motion and tracking, and augmented reality.
