Can Machines Save The World?

We’re challenging the pessimistic narrative on AI. In a thought-provoking symposium hosted by TU Wien and IWM, experts discussed fears, threats, and benefits.

Picture: Johannes Hloch / IWM

The tech narrative has shifted dramatically. The early 2000s heralded an era of optimism, promising a future of faster, more efficient progress and a world unified through the internet. Yet, as major tech firms grew, the sleek surface of this utopia started to show cracks. Concerns about data breaches, poor working conditions, and environmental harm mounted, sparking citizen movements and greater awareness of the dangerous effects of unchecked technology development.

Picture: Johannes Hloch / IWM

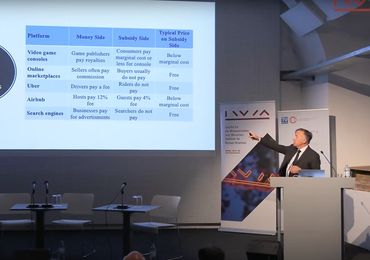

Despite the current hype, Artificial Intelligence (AI) is predominantly met with a doom-and-gloom perspective. Job displacement, privacy erosion, misinformation, economic inequality, and AI warfare are realistic concerns that cannot be downplayed. But how can we challenge these developments and, most importantly, use AI for good? On November 16-17, 2023, TU Wien, together with CAIML and IWM, hosted the symposium “Can Machines Save the World?” as part of their Digital Humanism Fellowship Program, bringing together experts from various fields to examine and challenge the pessimistic narrative surrounding AI. Former Federal President Heinz Fischer opened the event, thanking TU Wien, IWM, and BMK for their continuous efforts in research, awareness building, and support for regulation and policy-making within the Digital Humanism Initiative.

The advent of Digital Humanism marked a turning point in how we address technology and its development. Spurring global discussions on harnessing technology’s benefits while mitigating its risks, “the initiative built an international community of experts from diverse fields, putting Vienna at the center of a critical dialogue about the ethical, social, and human-centric governance of digital technologies,” Michael Wiesmüller (BMK) states.

Gerti Kappel, Dean of TU Wien Informatics, emphasized the many responsibilities computer scientists share in building a responsible and ethical digital world: “The technical solutions are there. We know how to build systems, design efficient algorithms, or implement fair data principles and can no longer develop technology for its own sake. Computer scientists must think outside the box and cultivate interdisciplinary exchange.”

For two days, 19 internationally renowned speakers and chairs from computer science, mathematics, social sciences, biology, economics, history, and law discussed with participants, on-site and online, the connections between humans and machines and their impact on the economy, politics, and the environment. Ludger Hagedorn (IWM), Hannes Werthner (TU Wien Informatics), and Stefan Woltran (CAIML, TU Wien) curated the symposium.

AI is not simply AI

Artificial Intelligence has a complex history spanning some 70 years. What we call AI now has nothing in common with the research endeavors of 30, 50, or 70 years ago. The term “Artificial Intelligence” was coined by John McCarthy in the proposal for the 1956 Dartmouth Conference, a proposal written to acquire research funding from the Rockefeller Foundation. Yet the journey of AI began even earlier with pioneers like Alan Turing, who proposed the concept of a machine that could simulate any form of human intelligence. In the early days, AI research was driven by figures like Marvin Minsky and John McCarthy, who envisioned machines capable of human-like reasoning. The field saw early successes in the 1950s and 1960s with programs that could solve algebra problems and prove geometric theorems.

However, the term “AI” wasn’t always popular. Earlier, concepts like “cybernetics” and “automata theory” were more prevalent. These terms focused more on the principles of feedback and control in electronic systems and less on mimicking human cognition. The optimism of AI’s early years led to inflated expectations, which, when unmet, contributed to the so-called AI Winter. This period was marked by a significant reduction in funding for and interest in AI research as the limitations of the technology became apparent. AI experienced a resurgence in the 1980s with expert systems, but it was short-lived. The promises of AI – from self-driving cars to human-like intelligence – went unfulfilled, and skepticism grew.

The landscape shifted dramatically with the advent of the internet and the explosion of data, alongside advances in computational power. This ushered in a new era of AI, dominated by machine learning and neural networks, which are the foundation of today’s Large Language Models (LLMs) like ChatGPT. While often marketed under the AI umbrella, these LLMs are scientifically distinct from the AI envisioned by early researchers. LLMs are based on pattern recognition and statistical inference rather than the symbolic reasoning and human-like cognition that early AI sought to emulate. They excel in processing and generating human language, but their understanding and reasoning are limited to the patterns found in their training data.

As Thomas Haigh explains, the term “AI” has become a brand, encompassing a wide range of technologies and marketing logics, each with its own capabilities and limitations. What is most concerning is that current AI applications are monopolized by a few international companies, limiting the scope for independent research, egalitarian use, and democratic decision-making about how the technology develops.

Similar fears, different threats?

From the invention of the printing press to the latest tech advancements, every major technological leap has been shadowed by a deep-seated fear of losing control. In the 15th century, Gutenberg’s invention revolutionized the spread of knowledge but also incited fears among the ruling class and religious authorities. The ability to mass-produce texts threatened control over information, previously the purview of a select few. Fast forward to the Industrial Revolution, and we see fears similar to those now sparked by the widespread use of AI. The advent of machines brought immense economic growth yet also triggered anxieties about job loss and the dehumanization of labor. The Luddites, for instance, famously destroyed machinery they believed threatened their livelihoods.

AI seems to be the latest frontier of this ongoing saga. Yet the aspiration toward human-like intelligence has changed our relationship with machines. With AI, we increasingly transfer human agency to devices. We trust that their actions and predictions are right, and we risk finding ourselves amid a self-fulfilling prophecy about machines and their control over us. This fear is not only about the technology itself but also about those who wield it. Past experiences of losing control over those who operate technology have left a lasting impact. The key challenge lies in transforming AI into a public good rather than leaving it predominantly in the hands of private entities. The development and widespread use of platforms like ChatGPT, primarily funded by private sources, highlight the imbalance in AI development, where 90% of funding comes from private investment and a mere 10% from public sources.

As we stand at the beginning of this technological era, we have already witnessed certain lock-ins in AI development that could prove challenging to alter. Helga Nowotny argues that our current approach is rooted in behaviorism: training models to ‘behave’ in desired ways. However, this approach fails to fully address the broader implications of AI for society and the need for more inclusive, diverse, and democratically controlled AI development.

How to reap the benefits of AI

In navigating this complex landscape, ensuring that AI remains a technological benefit hinges on several key pillars: validation, explainability, regulation, democratic access, and ethical considerations, all interwoven with the need for sustainable development. Users must understand how AI systems arrive at their conclusions; only then can these systems be implemented safely and truly follow ethical parameters. The ethical dimension is particularly challenging: it demands a nuanced understanding of morality in a digital context and the means to translate our humanistic principles to the technological level.

How can AI be safe? Safety is expected of everyday tech appliances, underscored by rigorous standards and regulations. Just as a well-designed toaster poses no threat when used correctly, AI, governed by comprehensive standards, can operate safely within its intended parameters. The democratization of AI is crucial in ensuring equitable access and representation and in avoiding both the concentration of power and the exacerbation of inequalities. Regulation and fair access to research and funding are the only way to de-monopolize current AI. AI’s sustainability is also a paramount concern. From the energy-intensive training of AI models to their lifecycle management, the impact of the “AI hype” must be addressed to align technological advancement with ecological responsibility.

Transforming AI into a force for good demands a holistic approach, balancing technological innovation with ethical, regulatory, and sustainable practices. In a world where machines are capable of being our greatest allies, it is ultimately human guidance that will harness this potential. Together, we might yet be able to save the world.

About the Digital Humanism Fellowship Program

The Digital Humanism Fellowship Program aims to provide one Senior and two Junior Visiting Fellows per semester with the means to explore the complex interplay of IT and humanity. TU Wien Informatics is an official partner of IWM, integrating the program’s fellows, their research, and their initiatives across both institutions. The Center for Artificial Intelligence and Machine Learning (CAIML), co-directed by Stefan Woltran, is the primary organizer at TU Wien Informatics. As part of the program, Senior Fellows at IWM are offered a visiting professorship at TU Wien Informatics. We also support IWM Junior Fellows with faculty resources, such as workspace, infrastructure, and supervision, and deepen our collaboration with IWM in outreach activities. The Digital Humanism Fellowship Program is funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK).

Visit IWM’s YouTube channel to re-watch the symposium.