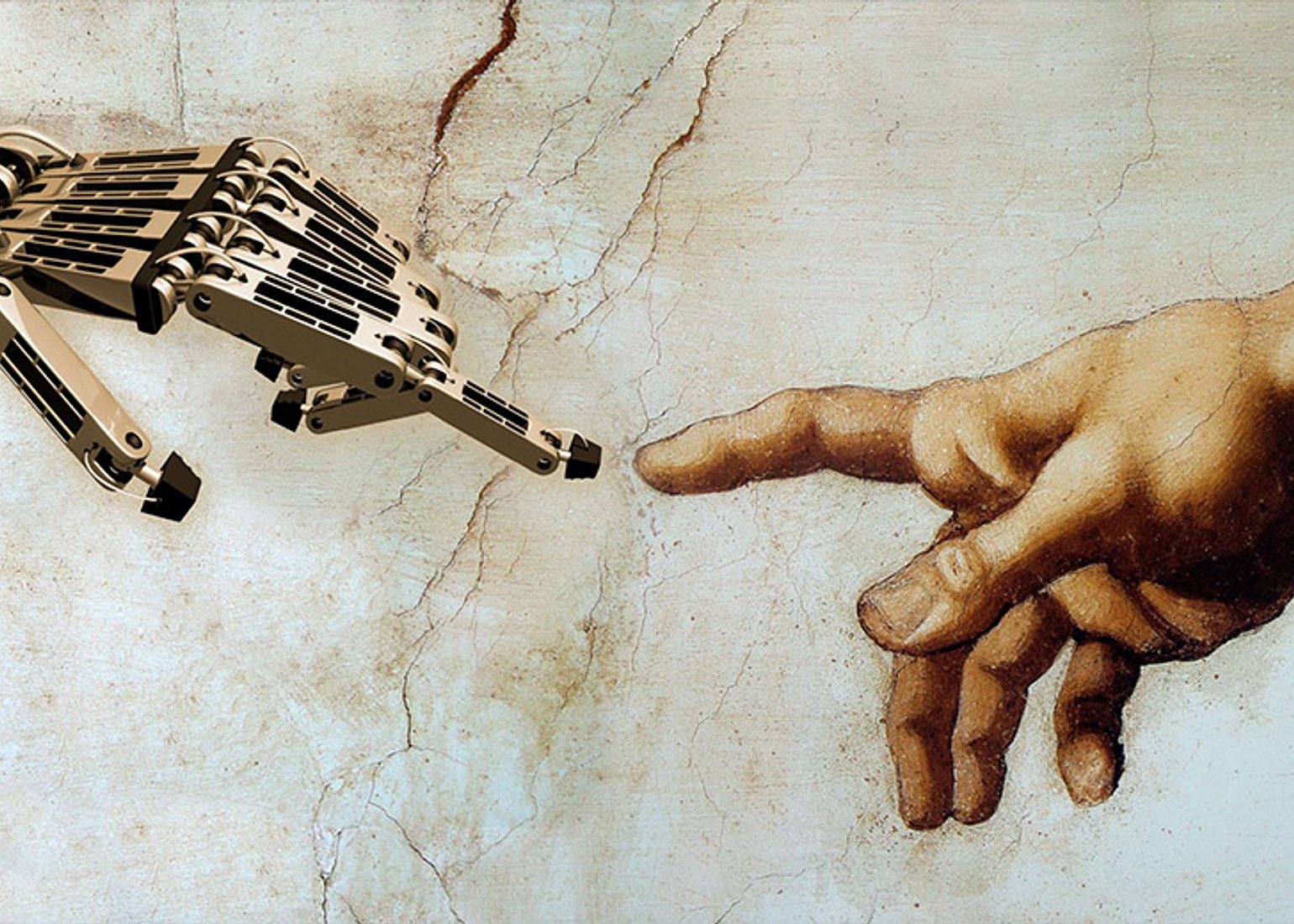

How Not to Destroy the World with Artificial Intelligence

Join our DIGHUM lecture series, in which Stuart Russell of UC Berkeley presents his views on this crucial issue.

This is an online-only event.

See description for details.

Stuart Russell (University of California, Berkeley, USA)

September 8, 2020

5:00–6:00 PM (17:00–18:00) CEST

Access

Join the Zoom conference with the password 0dzqxqiy. All talks will be streamed and recorded on the Digital Humanism YouTube channel. For announcements and slides, see the website.

About the Series

Digital humanism deals with the complex relationship between humans and machines. It acknowledges the potential of informatics and IT. At the same time, it points to related threats, such as privacy violations, ethical concerns about AI, automation and job losses, and the ongoing monopolization of the Web. The COVID-19 crisis has revealed both faces of accelerated digitalization; we are at a crucial moment in time.

For this reason, we have started a new initiative, the DIGHUM lectures, a series of regular online events discussing the different aspects of Digital Humanism. Each event features one or more speakers on a specific topic followed by a discussion, or a panel discussion, depending on the topic and speakers. The crisis seriously restricts our mobility, but it also makes it possible to take part in events from all over the world. Let's take this chance to meet virtually.

Abstract: How Not to Destroy the World with AI, by Stuart Russell

I will briefly survey recent and expected developments in AI and their implications. Some are enormously positive, while others, such as the development of autonomous weapons and the replacement of humans in economic roles, may be negative. Beyond these, we must expect that AI capabilities will eventually exceed those of humans across a range of real-world decision-making scenarios. Should this be a cause for concern, as Elon Musk, Stephen Hawking, and others have suggested? And if so, what can we do about it? While some in the mainstream AI community dismiss the issue, I will argue that the problem is real and that its technical aspects are solvable if we replace current definitions of AI with a version based on provable benefit to humans.

Curious about our other news? Subscribe to our news feed, calendar, or newsletter, or follow us on social media.