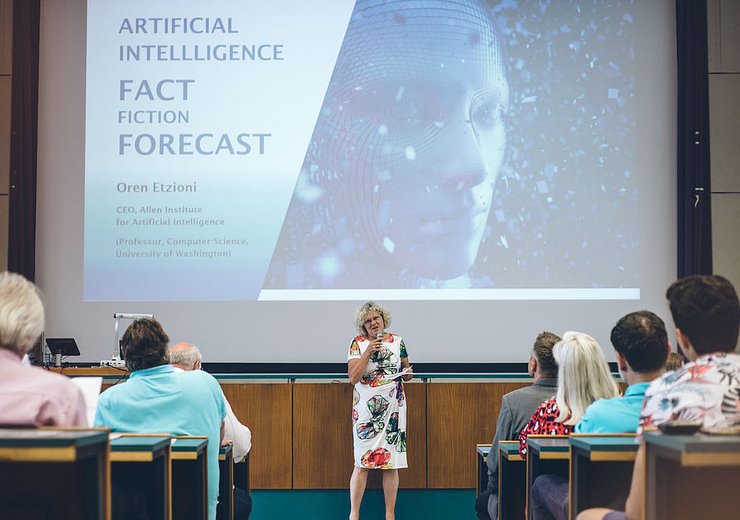

“Artificial Intelligence: Fact, Fiction, and Forecast”

Oren Etzioni argues for separating science from science fiction and for an ethically informed approach to the risks and opportunities of AI.

About

Dr. Oren Etzioni has served as the CEO of the Allen Institute for Artificial Intelligence since its inception in 2014. He has been a professor at the University of Washington since 1991 and a Venture Partner at the Madrona Venture Group since 2000. He has garnered several awards, including Seattle’s Geek of the Year (2013), the Robert Engelmore Memorial Award (2007), the IJCAI Distinguished Paper Award (2005), AAAI Fellow (2003), and a National Young Investigator Award.

Is Artificial Intelligence (AI) an “existential threat to humanity”, as Elon Musk has put it? What impact will AI have on society, jobs, privacy and other areas such as weapons? In this year’s Gödel Lecture, hosted by TU Wien Informatics on 4 June 2019, Oren Etzioni, CEO of the Seattle-based Allen Institute for Artificial Intelligence and long-time professor in the University of Washington’s Computer Science department, addressed these issues and argued for separating science from science fiction.

In their welcome addresses, Rector Sabine Seidler and Hannes Werthner, Dean of the Faculty of Informatics, emphasised the societal impact of informatics and the importance of keeping people at the centre of the profound changes brought about by technology. Professor Stefan Szeider introduced the world-class scientist Oren Etzioni at this year’s Vienna Gödel Lecture. The series, named after the famous Austrian-American logician, mathematician and philosopher Kurt Gödel (1906-1978), was first held in 2013 and highlights the fundamental and disruptive contribution of computer science to our information society.

Demon AI?

“AI evokes fear,” Oren Etzioni said at the opening of his talk “Artificial Intelligence: Fact, Fiction, and Forecast”. Elon Musk, for example, warns of AI as a demon that could wipe out humanity, and there is an ongoing debate about whether Artificial Intelligence is good or evil. Etzioni encouraged the audience not to view AI in moral categories but as a tool that still needs to be improved: “We really need a more nuanced view on AI. Let’s separate science from science fiction.” He reminded the audience that machine learning is 99 percent human work and that AI systems are quite narrow: they are intelligent, but not autonomous. They cannot generalise, and they lack common sense, which he regards as a distinctly human characteristic. Etzioni underlined his point by quoting Australian computer scientist Rodney Brooks: “If you are worried about the Terminator, just keep the door closed.”

“Human drivers worry me”

Despite the legitimate concerns people have about AI and related technologies, such as autonomous weapons, he believes AI has strong potential to be a beneficial technology. The question, he said, is how we deal with these tools and how we can improve them. Eric Horvitz of Microsoft Research, a member of the Scientific Advisory Committee of the Allen Institute for Artificial Intelligence, has stated, “It’s the absence of AI technologies that is already killing people,” and Etzioni agrees, citing as one example the medical errors made by tired and overworked medical staff. “Human drivers worry me,” he added, alluding to the high death toll caused by dangerous driving; intelligent cars, by contrast, could save many lives.

Regulating AI

According to Oren Etzioni, we should be cautious about general regulation, since regulation is a slow process, whereas AI is a fast-moving and amorphous field. But why not simply declare a moratorium on AI? The Allen Institute’s mission is “to contribute to humanity through high-impact AI research and engineering”. Etzioni and his team take a results-oriented approach to the complex challenges in AI, and it should be clear that AI is neither human nor itself liable for any consequences. “‘My AI did it’ is not an excuse,” he said; human operators should be held responsible for any harm caused by the technology. He also criticised technology that violates our privacy, as evident in Google’s “the ad knew too much” option. Nor should AI retain or disclose confidential information without the approval of its source, Etzioni said. But still: “AI is not good or evil; it is a tool, a technology, and the choice is ours!”
